1. Introduction

As a result of the COVID-19 pandemic, educational institutions had to modify their pedagogical strategies. The most immediate effect on students was the suspension of face-to-face instruction, which placed them in an entirely new situation and created uncertainty about the duration of the changes, their impact on students' daily lives, and the continuity of their education. Instructors also faced significant disruptions, especially in teaching virtually. This turbulence was more pronounced in institutions of higher education (IHEs), where Science, Technology, Engineering, Mathematics, and Medicine (STEMM) courses are prevalent and laboratory work is an essential component of the curriculum. Research has shown that hands-on practice is vital in STEMM education (Hofstein & Lunetta, 2004; Hofstein & Mamlok-Naaman, 2007; Lunetta et al., 2007; Ma & Nickerson, 2006; Satterthwait, 2010; Tobin, 1990). Because lab courses are meant to integrate theory with practice, they must be carefully designed to make this integration as effective for learning as possible. When classes and labs could no longer meet in person, educators had to create or adopt innovative tools, approaches, and teaching methodologies as they moved to online platforms (Cuaton, 2020; Ferdig et al., 2020; Kaup et al., 2020; Neuwirth et al., 2021; Pace et al., 2020; Toquero, 2020). Not all IHEs had strategies to ensure the continuity of teaching activities; this was especially true for lab courses, which require extensive hands-on participation that is difficult to achieve in an online environment.

Internet and computing technologies have transformed traditional instructional laboratories by enabling virtual and remote experiments with interactive learning tools, supporting collaboration through digital platforms, and allowing personalized learning experiences, making learning more flexible, accessible, and engaging beyond the boundaries of the traditional lab (Feisel & Rosa, 2005). Previous research has shown that such online labs can achieve student learning outcomes equal to or better than those of traditional in-person labs (Brinson, 2015; Faulconer & Gruss, 2018; Reeves & Crippen, 2021). However, these studies did not examine how effectively labs were transitioned to online platforms to support remote instruction and ensure academic continuity during the COVID-19 pandemic. This systematic literature review identifies the challenges IHEs faced in transitioning to online platforms and how they adapted to the changes during the pandemic.

Online labs can be defined as instructional labs in which students and equipment are not located in the same physical space (May, Alves, et al., 2023). Online labs have long been considered an alternative way of delivering laboratory experiences in STEMM fields. However, to ensure that students receive an effective learning experience, it is important to carefully evaluate the pedagogical and curricular value of online labs compared with traditional, in-person labs (May, Morkos, et al., 2023). There are several approaches to conducting online labs: remote labs, virtual labs, and video-based labs. A remote lab is conducted over the Internet, with the actual components or instruments used in the experiment located separately from the site from which they are controlled (Gamage et al., 2020). Virtual labs use virtual reality and computer-based simulation tools (Gamage et al., 2020). Video-based labs give students a detailed view of a real lab so that they can visualize the whole experimental process and its environment (Gamage et al., 2020). Alternatively, labs can be conducted by providing students with lab supply kits or by using equipment available in their homes; these are known as home labs (Liang et al., 2020). Unlike remote labs, which involve real equipment located at a facility separate from the student, home labs place the equipment in the same location as the student, typically the student's home. Home labs can be built around everyday kitchen utensils, instructor-assembled kits, or commercial lab kits (Jeschofnig & Jeschofnig, 2011). For clarity, we use the term “online labs” throughout this review to describe all labs conducted entirely or partly on an online platform, and we specify the strategy or type of technology used in each.

Our specific objectives were to identify the strategies IHEs used to transition to online labs, understand the effectiveness of these online lab courses, identify students’ and instructors’ perceptions of these labs, and explore the challenges instructors and students faced during the transition. Based on these objectives, we posed the following research questions (RQs):

RQ 1: What strategies and technologies were used by IHEs to deliver online labs?

RQ 2: How effective was the transition from in-person to online platforms?

RQ 3: What challenges did IHEs face in addressing the barriers caused by the pandemic?

In this review, Section 2 outlines the methodology for selecting articles based on the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines. Section 3 examines the strategies and technologies utilized during the transition, the effectiveness of the transition to online platforms, and the challenges encountered by IHEs in this process. In Section 4, we present an online lab design and implementation framework to facilitate the shift of lab courses to remote delivery, applicable across various domains.

2. Methodology

The PRISMA systematic literature review framework (Moher et al., 2011) was used to search for research articles on digital technologies and online labs implemented by IHEs as they moved from in-person to online instruction during the COVID-19 pandemic.

2.1. Information Sources

For this literature review, a broad search was conducted in the Education Resources Information Center (ERIC) and ProQuest databases, as these provide extensive, multidisciplinary coverage of STEMM fields.

2.2. Eligibility Criteria

Each article had to fulfill specific criteria to be included in this review. Specifically, the studies had to be conducted in the STEMM fields and written in English. They had to focus on the adaptations and strategies used by educational institutions during the transition from in-person to online instruction. This review excluded articles not written in English, conference proceedings, letters, and review articles that did not explore STEMM education and online learning.

2.3. Search Strategy and Outcomes

The research team considered and refined search terms appropriate to the research questions. The keywords listed in Table 1 below were combined using the Boolean operators AND and OR:

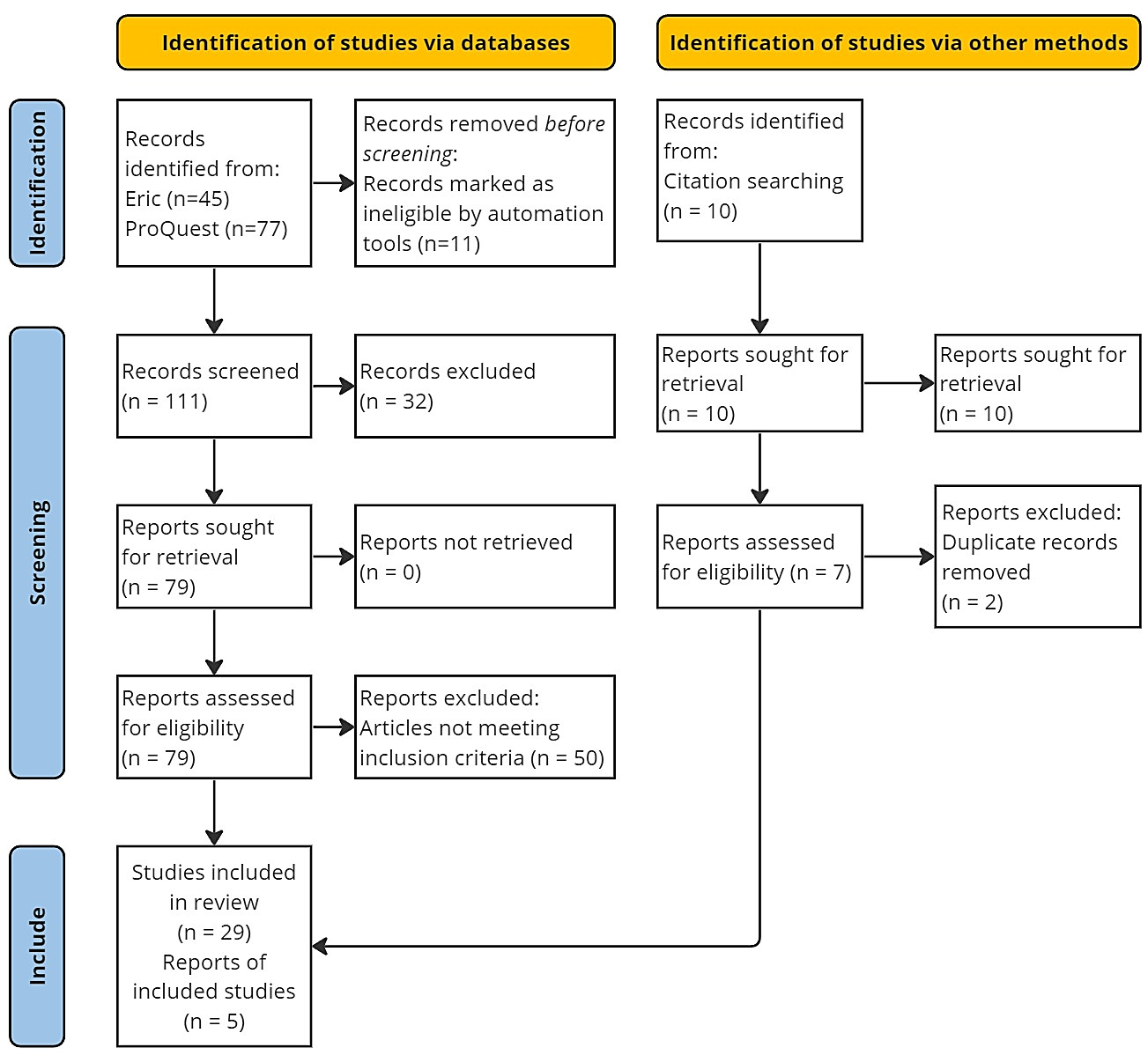

The 111 articles identified were divided among the three researchers for title and abstract screening against the inclusion and exclusion criteria, which retained 79 articles. The three researchers then independently reviewed the 79 full-text articles and excluded 50 for not meeting the inclusion criteria, leaving 29 articles. An additional ten articles were identified from the reference lists of these 29 articles; after screening, seven of them satisfied the inclusion criteria. After two duplicate articles were removed, 34 articles were selected for this literature review, as shown in Figure 1.
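The screening counts reported above can be reconciled as a simple tally. This uses only the numbers stated in the text and is meant as an arithmetic check of the flow summarized in Figure 1, not as a reproduction of the figure itself:

$$
\begin{aligned}
\text{Title/abstract screening:}\quad & 111 \rightarrow 79 \\
\text{Full-text screening:}\quad & 79 - 50 = 29 \\
\text{Reference-list search:}\quad & 10 \rightarrow 7 \\
\text{Final set (after removing duplicates):}\quad & 29 + 7 - 2 = 34
\end{aligned}
$$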

2.4. Data Abstraction and Synthesis

The research team extracted data from the selected articles and examined and validated them in detail. The collected data were then organized thematically. The extracted data are presented in Appendix A (Author/Journal, Place/Domain, Study Design, and Measures).

3. Results

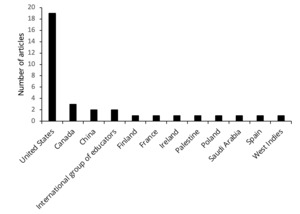

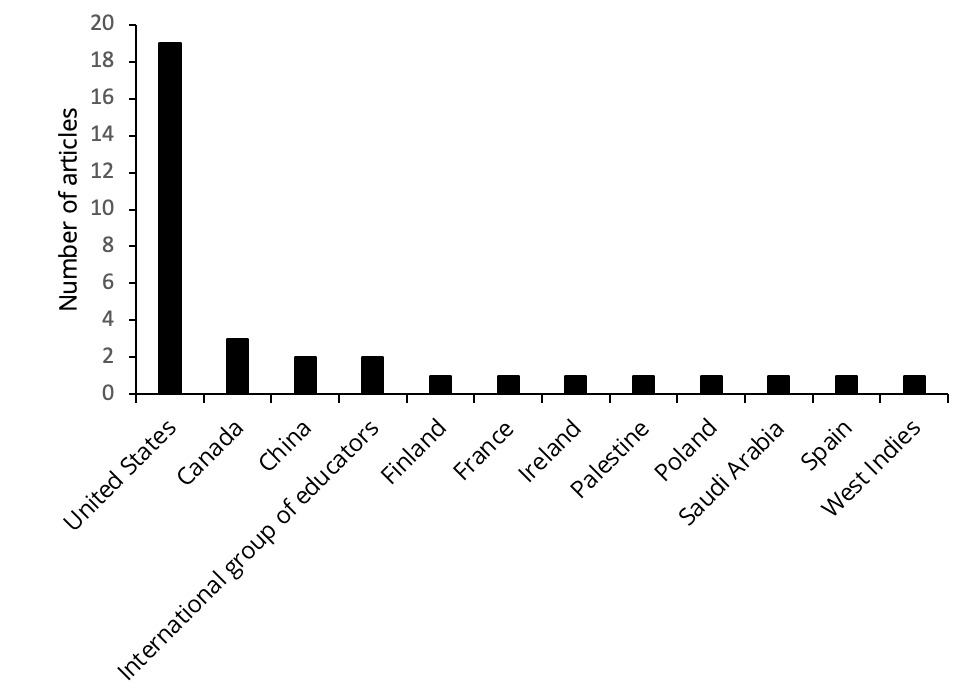

Of the 34 articles selected for this review, 19 studies were conducted in the United States, three in Canada, two in China, and one each in Finland, France, Ireland, Palestine, Poland, Saudi Arabia, Spain, and the West Indies; the remaining two involved teams of educators from different countries (Chang et al., 2021; Choate et al., 2021), as shown in Figure 2.

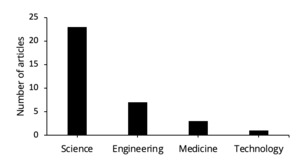

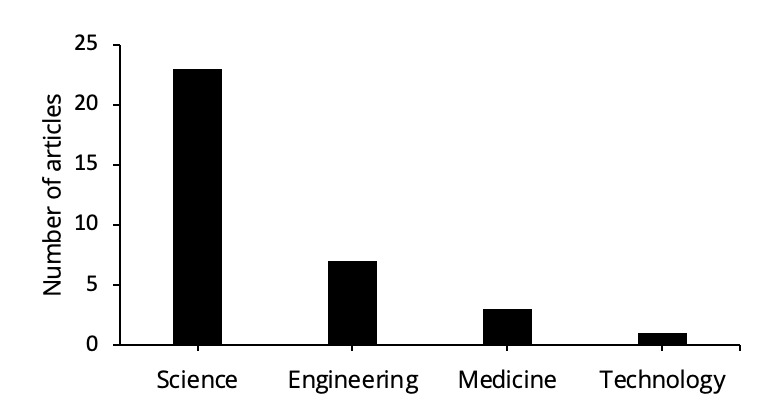

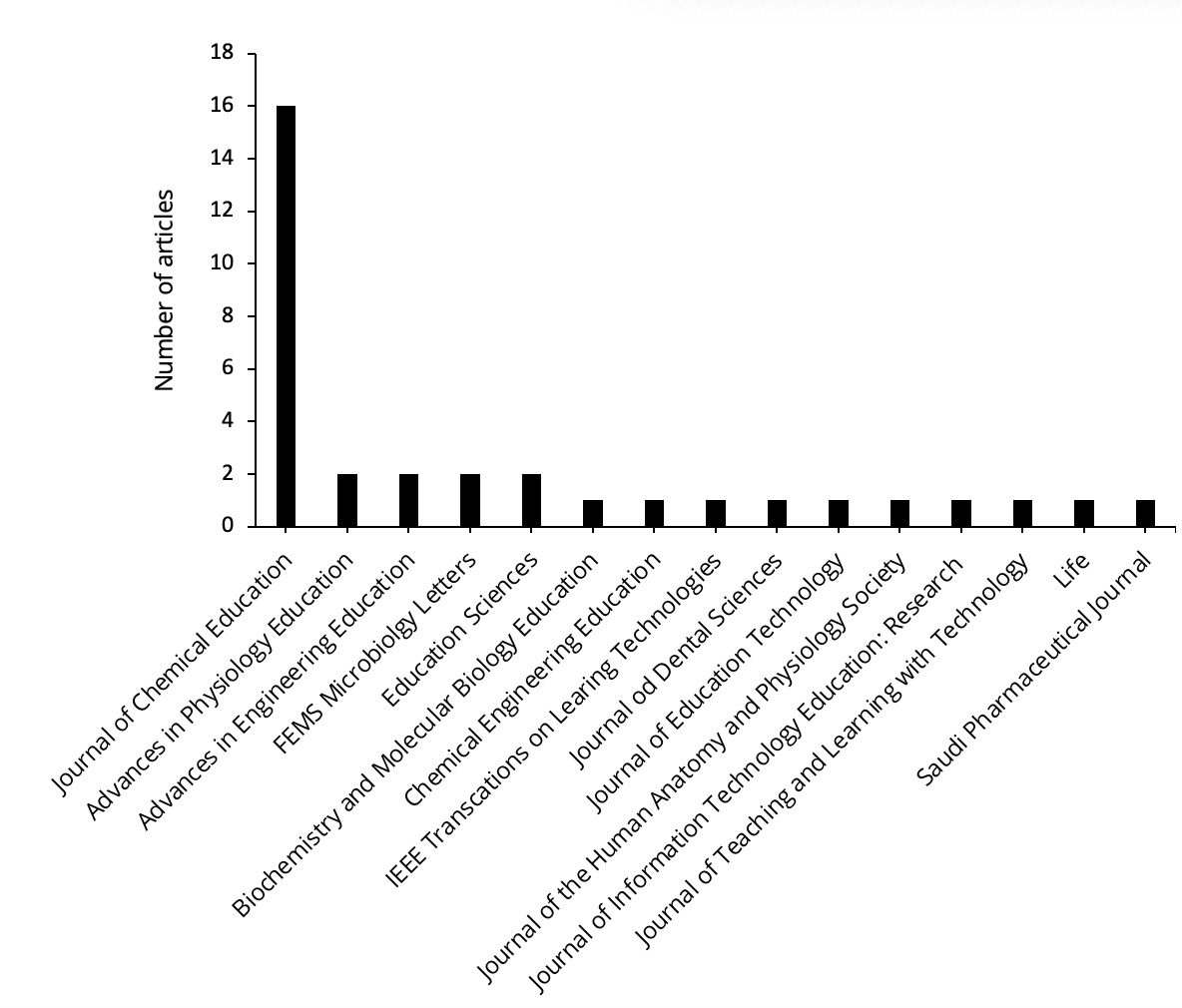

The articles reviewed in this study were drawn from four STEMM domains. Of the 34 studies reviewed, 67.65% were from science, 20.59% from engineering, 8.82% from medicine, and 2.94% from technology, as shown in Figure 3. Of the 23 studies in the science domain, 16 were from chemistry, six from biology, and one from physics.
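The domain shares can be recomputed from the underlying study counts. In this sketch, the science count (23) and the total (34) are stated in the text, while the engineering (7), medicine (3), and technology (1) counts are inferred from the reported percentages, so treat them as derived values rather than figures taken directly from the review data.

```python
# Study counts per STEMM domain. Science (23) and the total (34) are stated in the
# text; the other counts are inferred from the reported percentages.
domain_counts = {
    "science": 23,
    "engineering": 7,
    "medicine": 3,
    "technology": 1,
}

total = sum(domain_counts.values())  # 34 studies in total

# Percentage share of each domain, rounded to two decimal places.
shares = {domain: round(100 * n / total, 2) for domain, n in domain_counts.items()}

for domain, pct in shares.items():
    print(f"{domain}: {domain_counts[domain]}/{total} = {pct}%")
```

Rounding to two decimal places gives 67.65% for science and 20.59% for engineering; truncating instead gives 67.64% and 20.58%.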

Reflecting the prominence of chemistry among the reviewed articles, 16 of the publications appeared in the Journal of Chemical Education, as shown in Figure 4.

3.1. Strategies Used in the Transition of Labs

The rapid transition of labs to the virtual environment during the pandemic was a complex process that required careful planning, development, and coordination. This systematic review examined the methods IHEs used to deliver lab courses remotely to determine their effectiveness for knowledge acquisition and the development of experimental skills. Video recordings, desktop simulations, and home labs were the most widely used strategies across the STEMM domains. Pre-recorded videos were the most frequently used (45%), followed by desktop simulations (25%), home labs (6%), and live-streamed videos (6%). Other pedagogical techniques included remote programming labs (4%), an online panel format in which students were divided into tutorial and learning teams (2%), analysis of previously collected data (2%), a remote titration unit (2%), an online learning platform with data acquisition equipment (2%), and a visual tutor, an online learning tool introduced in a digital electronics course (2%). Two studies describe strategies implemented during a later phase of the pandemic (4%) (Hamed & Aljanazrah, 2020; Koort & Åvall-Jääskeläinen, 2021). Synchronous and asynchronous modes, alone or in combination, were used across all the strategies explored. The consolidated list of strategies used in the identified articles is provided in Table 2.

Instructors created videos by recording their own lab demonstrations; these are referred to as pre-recorded videos. Of the 23 studies that focused on video recordings, 11 examined pre-recorded videos (Almetwazi et al., 2020; Buchberger et al., 2020; Chierichetti & Backer, 2021; Choate et al., 2021; Davy & Quane, 2021; Franklin et al., 2021; Leung et al., 2020; Ożadowicz, 2020; Petillion & McNeil, 2021; Tran et al., 2020; Wasmer, 2021). Institutions that were unable to record lab activities before their campuses were locked down relied instead on commercially available videos (Anstey et al., 2020; Hamed & Aljanazrah, 2020; Huang, 2020; Lacey & Wall, 2021; Liang et al., 2020; Wang & Ren, 2020; Zhou, 2020) or on open online sources such as massive open online courses and YouTube (Anzovino et al., 2020; Choate et al., 2021; Dukes, 2020; George, 2020; Jones et al., 2021). These commercial and YouTube videos were also used as supplementary instructional materials alongside pre-recorded videos. For example, students who wanted to learn more about lab safety and cleaning while conducting chemical experiments were provided with YouTube links demonstrating these activities in detail. Recorded videos hold considerable promise in laboratory-based fields, where brief clips can effectively illustrate complex processes (Dash et al., 2016). These recordings helped teach students how the instruments operate and allowed them to observe the experiments. However, the videos lacked interactivity: students became passive observers, unable to engage in hands-on activities.

Unlike video recordings, live-streamed videos showed an instructor conducting experiments in real time in a physical laboratory. This strategy was used primarily in chemistry labs, which involve handling glassware and equipment, waiting for reactions, and recording observational data. Students could interact verbally with the instructor and thus participate in the experiment (Davy & Quane, 2021; Petillion & McNeil, 2021; Woelk & Whitefield, 2020). Because the live-streamed sessions were intended to improve the lab experience, instructors had to use high-resolution cameras to capture the salient features of the experiment (Davy & Quane, 2021). Instructors also engaged students by eliciting discussion about the experiment, and students were encouraged to make observations and record the data the instructor reported during the sessions (Woelk & Whitefield, 2020). In addition, live-streamed videos allowed students to observe problems or unexpected results and to help develop solutions to them (Petillion & McNeil, 2021). As a result, students were actively involved in the process in real time through verbal interaction and observation. For example, during a titration, students could decide the drop rate of the burette and whether to stir the sample (Davy & Quane, 2021).

Instructors also used simulations to implement online labs, which students could interact with on a computer or smartphone. Through these virtual lab simulations, students participated in lab experiments or modeled physical phenomena (Fox et al., 2020), completing each step by interacting with models of the experiment on their computers or smartphones (Jones et al., 2021). For instance, in a simulated chemistry Buffers lab, students created a buffer (an acid-base pair), prepared the solutions, and collected and independently analyzed the data obtained by measuring the pH of all solutions (Jones et al., 2021). Students could experience working on the experiment by adjusting the apparatus, pouring solutions, and measuring variables, all by systematically controlling the simulation platform from their computers. However, simulations could not provide the genuine hands-on experience that is important in STEMM fields. A few studies made simulation labs more engaging by adding supplementary videos, either pre-recorded or from commercial platforms (Aguirre & Selampinar, 2020; Hamed & Aljanazrah, 2020; Jones et al., 2021; Liang et al., 2020; Wasmer, 2021).

Unlike video recordings and simulations, instructors assigned home labs to provide students with hands-on activities (Ediger & Rockwell, 2020; R. Gao et al., 2020; Liang et al., 2020). These labs used readily available smartphones/laptops and software, kitchen utensils, common materials/ingredients found at home, and inexpensive lab kits mailed to the students. For example, a mechanical measurements engineering course used heating devices like electric and gas stoves at students’ homes to investigate the characteristics of thermocouples (Liang et al., 2020). While home labs could replicate some lab experiences, not every lab could be replicated at home. Some labs required expert supervision, whereas others utilized expensive, specialized equipment that students could not access at home.

Two studies replaced practical lab work with alternative approaches: an online panel format (J. Gao et al., 2021) and the analysis of previously collected data alone (Dietrich et al., 2020). The online panel format divided students into tutorial and learning teams (J. Gao et al., 2021). Tutorial teams consisted of students who had already completed the in-person lab activities, while learning teams consisted of students who had not. In this team-based approach, tutorial teams created mini-lessons that gave the learning teams specific and in-depth information about the experiments. The strategy leveraged skills acquired before the shift to distance learning to enhance learning during the pandemic.

Two studies explored remote programming labs, primarily in engineering. For example, an engineering dynamics system lab used a machine learning module covering basic statistics, data analysis techniques, and machine learning concepts, delivered through MATLAB live scripts. A MATLAB live script is an interactive document, developed by MathWorks®, that combines code and its output/graphics with formatted text and equations (Nevaranta et al., 2019). The instructors provided interactive materials that allowed students to conduct experiments with available resources, such as smartphones, to gather motion data, classify human activities, and analyze the results using machine learning algorithms (Leung et al., 2020). In contrast, a software engineering lab used a remote lab infrastructure comprising an open-source computer monitoring system (Veyon), a virtual private network, remote lab scripts for restarting and installing the remote labs and for gathering information about all lab attendees, and a web conferencing platform (Garcia et al., 2021). The monitoring system allowed instructors to monitor students’ computers and even lock them when needed, for example during exams. The web conferencing platform supported synchronous delivery of the remote labs: instructors could explain the lab tasks, engage with students through audio and chat, hold one-on-one conversations, share lab resources, receive student uploads, and use screen sharing and whiteboard features. After the instructor explained the programming problem through the web conferencing platform, students worked on the lab activity while being monitored (Garcia et al., 2021).
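The activity-classification exercise described above can be illustrated with a deliberately small sketch. It is written in Python rather than the MATLAB live scripts used in the course, and it uses a nearest-centroid classifier chosen only for simplicity; it is not the algorithm reported by Leung et al. (2020). The activity labels, the acceleration values, and the two features (mean and standard deviation of acceleration magnitude per window) are all hypothetical.

```python
import math
import statistics

# Hypothetical windows of smartphone acceleration magnitudes (m/s^2).
# These values are illustrative only and are NOT data from Leung et al. (2020).
training = [
    ("still",   [9.8, 9.8, 9.9, 9.8, 9.7]),
    ("still",   [9.8, 9.9, 9.8, 9.8, 9.8]),
    ("walking", [8.5, 11.0, 9.0, 11.5, 8.8]),
    ("walking", [9.0, 11.2, 8.6, 11.0, 9.2]),
    ("running", [5.0, 16.0, 6.0, 17.0, 5.5]),
    ("running", [6.0, 15.5, 5.2, 16.8, 6.1]),
]


def features(window):
    """Summarize one window of magnitudes as (mean, population standard deviation)."""
    return (statistics.fmean(window), statistics.pstdev(window))


def train_centroids(labeled_windows):
    """Average the feature vectors of each activity to get one centroid per label."""
    grouped = {}
    for label, window in labeled_windows:
        grouped.setdefault(label, []).append(features(window))
    return {
        label: tuple(statistics.fmean(dim) for dim in zip(*vectors))
        for label, vectors in grouped.items()
    }


def classify(window, centroids):
    """Return the label whose centroid is closest (Euclidean distance) to the window's features."""
    f = features(window)
    return min(centroids, key=lambda label: math.dist(f, centroids[label]))


centroids = train_centroids(training)
print(classify([9.8, 9.8, 9.7, 9.9, 9.8], centroids))   # prints: still
print(classify([8.7, 11.3, 8.9, 11.1, 9.0], centroids))  # prints: walking
```

Because gravity dominates the magnitude, all three hypothetical activities share a similar mean, so the standard deviation carries most of the discriminating information in this toy setup; a course module would likely use richer features and a library classifier.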

Other strategies instructors used to replace in-person lab experiences included a remote titration unit, an online learning platform with data acquisition equipment, a visual tutor, and hybrid in-person and online labs. A remote titration unit built on a Raspberry Pi and equipped with a webcam and a servo motor allowed students in a chemistry lab to control the experimental unit remotely (Soong et al., 2021). Students selected the volume of titrant to add to the solution and monitored the progress of the titration via the webcam. This approach allowed students to participate remotely in a real laboratory setting. The configuration could also address accessibility issues by enabling students to engage in a laboratory activity in an environment suited to their learning needs.

Instructors used the Lt® online learning platform to deliver an anatomy and physiology lab (Stokes & Silverthorn, 2021). This platform integrates pre-made online lectures with computer data acquisition hardware and transducers (used to collect physiological data), questions, background material, hardware configurations, and data analysis tools. Instructors can add or remove content to better align the lessons with their course’s learning objectives. The platform offers a wide range of question types with features such as suggestions, immediate feedback, multiple attempts, and automated grading, and students can work through the lessons individually or in small groups.

A digital electronics visual tutor was employed in an electrical and computer engineering course to enhance the learning experience for introductory digital electronics topics. Visual Tutor is an online learning tool that offers interactive modules that cover various concepts in the introductory digital electronics course (George, 2020). Additionally, the tutor provides a variety of mock quizzes to assess understanding and a newly developed course book tailored specifically for the course (George, 2020).

The strategies discussed thus far were implemented early in the pandemic, when institutions were completely closed and academic continuity depended on remote approaches. Two studies, however, examined strategies introduced later in the pandemic. One explored a remote partner model introduced in the bacteriology and mycology lab of an infection microbiology course from December 2020 to March 2021 (Koort & Åvall-Jääskeläinen, 2021). This lab consisted of introductory and summary lectures on Zoom and SafeLab online self-study modules, combined with hybrid in-person/online experimentation. Students worked in pairs, one participating online and the other physically in the lab, and partners took turns so that every student had the opportunity to work in the lab. The student in the lab received in-person instruction, while the remote partner used Zoom to record observations, perform calculations, and draw conclusions about the experiments. The other study provided online video recordings to help students comprehend the fundamental ideas and incorporated simulations so that students could work on the experiments virtually before performing them in the in-person lab (Hamed & Aljanazrah, 2020).

3.2. Effectiveness in the Transition of Labs

Studies evaluating the impact of educational tools and technology focus on two main aspects: the effectiveness of the tool in teaching students and the user’s experience with the system (Jenkinson, 2009). Efficacy assessments primarily measure knowledge acquisition, whereas usability studies focus on how usable the system is, irrespective of its contribution to meeting learning objectives. We evaluated the effectiveness of the strategies identified in Section 3.1 by examining student learning outcomes and instructor and student satisfaction and perceptions across the various online labs.

3.2.1. Learning Outcomes

Of the 34 articles reviewed, only 15 evaluated the learning outcomes attained in online labs, using assessment tools such as pre- and post-tests, assignments, exams and quizzes, and task safety and performance measures. Of these 15 articles:

- Three studies examined labs that integrated video recordings and desktop simulations.
- Two studies focused on labs that used only video recordings.
- Two studies looked at labs that were conducted using home labs.
- Two studies explored labs that used a hybrid approach.
- One study each investigated labs using desktop simulations, live-stream videos, remote programming labs, online panel formats, learning platforms with data acquisition equipment, and visual tutors with video recordings.

Online labs that integrated video recordings and desktop simulations: The studies that combined videos and simulations found that students’ learning outcomes remained the same after online labs were implemented. One study assessed learning outcomes by comparing the average final test scores on the online platform with those from the previous year’s in-person chemistry labs (Aguirre & Selampinar, 2020); to do so, it used the same final examination questions as an earlier semester and compared the outcomes. Another study compared pretest and posttest scores within an online general chemistry lab (Jones et al., 2021). A third study analyzed student performance in two virtual labs focused on circuit experiments (Liang et al., 2020). These labs used a remote-controlled platform called ELF-BOX3 and an open-source virtual breadboard called Breadboard Simulator. After completing the labs online, students wrote lab reports, whose average score exceeded 80%.

Online labs that implemented video recordings: Labs delivered through video recordings also achieved learning outcomes similar to those of traditional labs (Davy & Quane, 2021; Tran et al., 2020). In an organic chemistry course, post-lab assessment scores achieved during remote delivery were compared with those from in-person lab sessions (Tran et al., 2020). In-person assessments in this course included prelab quizzes, lab completion, post-lab assignments, and exams, with lab completion and the yield of the reaction product usually contributing 25% of the overall grade. Because lab work and product yield could not be assessed remotely, the study reassigned this 25% weighting to new post-lab assignments. These remote assignments focused on examining and evaluating results rather than on completing the lab, so they emphasized scientific concepts more than practical skills, which led to higher post-lab assessment scores (Tran et al., 2020). In a study of a titration lab and a Synthesis and Purification Lab Exercise in an undergraduate chemistry course, pre-COVID learning outcomes were compared with those of labs delivered through video recordings (Davy & Quane, 2021). After the online labs, student performance was assessed in several areas: making and recording observations, performing relevant calculations, deciding on the proper endpoint, assessing the quality of the results, deciding whether results were inadequate, processing and analyzing samples, and experiencing the analysis of real-world samples. Instructors then compared which learning outcomes were achieved on the online platform and in traditional in-person labs.

Online labs that implemented home labs: One study included home labs covering topics such as the operating characteristics of thermocouples, strain in cantilever beams, and the use of accelerometers to measure dynamic mechanical systems. After finishing their labs, students submitted lab reports, whose average score was over 80% (Liang et al., 2020). Another study examined student performance in an introductory chemistry course (R. Gao et al., 2020). Students submitted lab reports after performing a copper chemistry experiment in a kitchen chemistry lab, and these reports were compared with lab reports from the previous year. The comparison showed that students received higher grades in the kitchen chemistry lab. However, these observations come from a single kitchen chemistry lab and should not be considered a comprehensive assessment of kitchen chemistry experiments.

Online labs that implemented a hybrid approach: A study of a remote partner model that combined onsite and online platforms found that practical exam scores in a microbiology course were similar in the pre-pandemic and pandemic periods (Koort & Åvall-Jääskeläinen, 2021). Toward the end of the lab, teachers evaluated students’ proficiency in hands-on microbiological laboratory skills through a practical exam in which students worked in pairs to identify an unknown bacterium. The results were compared with those from previous years (2018-2020), when traditional on-site laboratory learning was the norm. These results suggest that the in-person and online lab platforms worked well together, making the combination a good option for lab classes with limited capacity for in-person attendance (Koort & Åvall-Jääskeläinen, 2021). Another study likewise found no significant differences in students’ achievement in a physics lab between an experimental group (videos and simulations for the theoretical presentation, followed by in-person practical work) and a control group taught using the traditional method (face-to-face theoretical presentation followed by practical work) (Hamed & Aljanazrah, 2020). Data on students’ performance during the hands-on experiments in the real lab were collected by observing both the experimental and control groups. During the practical exams, observation focused on several aspects: students’ grouping and discussions, the level of support provided by teaching assistants (including the type and amount of help), the support received from peers, the ability to identify the required equipment and set up experiments, proficiency in collecting data and conducting experiments within the expected time and pace, and students’ body language (Hamed & Aljanazrah, 2020).

Online labs that implemented desktop simulations: One study presented data suggesting that learning outcomes were maintained or improved in labs incorporating simulations (R. Gao et al., 2020). Students completed the online lab using McGraw Hill’s LearnSmart Lab® series, which allowed them to perform experiments in virtual simulations and answer multiple-choice or matching questions to build conceptual knowledge. After each LearnSmart Lab session, students received a performance report covering experimental operation and conceptual understanding, and these reports were compared with those from earlier in-person labs. Students’ performance in the virtual biochemistry laboratories in spring 2020 was nearly identical to that of students in comparable in-person activities in fall 2017 (R. Gao et al., 2020). The study attributed these learning outcomes to the ability to configure and personalize simulations to support student learning, which allowed students to repeat experiments multiple times until they achieved satisfactory results before submitting their final reports.

Online labs that used live streaming videos: In the same study of a titration lab and a Synthesis and Purification Lab Exercise in an undergraduate chemistry course, pre-COVID learning outcomes were also compared with those of labs conducted using live-streamed videos (Davy & Quane, 2021). Students’ performance during the live-streamed sessions was evaluated in various areas, including making and recording observations, performing relevant calculations, determining the correct endpoint, assessing result quality, deciding whether results were inadequate, processing and analyzing samples, and analyzing real-world samples. Instructors then compared the achievement of these learning outcomes on the online platform and in traditional in-person labs, and also compared how well video recordings and live-stream videos delivered the pre-COVID learning outcomes. Live-stream delivery appeared to achieve more of these learning outcomes than recorded videos.

Online labs that used remote programming: A study in a software engineering lab focused on solving programming problems found that student grades over nine years (2011-2020) remained unchanged within a 95% confidence interval (Garcia et al., 2021). The main goal of this remote lab was to solve programming problems using different language features and paradigms. This result suggests that synchronous remote labs are effective for courses that can be completed entirely on computers and do not require the basic experimental skills needed in other STEMM domains.

Online labs that used an online panel format: In a chemical engineering lab, an online panel format involving tutorial and learning teams yielded student performance consistent with previous years. After completing the online lab, students submitted both oral and written lab reports, which were analyzed and compared with lab reports from previous years. Because the teaching approach had changed, the grading rubrics were adjusted to align with the modified course structure, and students’ primary focus was on demonstrating a comprehensive understanding of fundamental concepts and effective communication and teamwork skills (J. Gao et al., 2021).

Online labs that used learning platforms with data acquisition equipment: In a study of an anatomy and physiology lab, students' performance on laboratory reports was negatively affected in the virtual laboratories (Stokes & Silverthorn, 2021). In-person laboratories included assessments of individual effort (pre-laboratory work) and group work (group quiz, data analysis, and laboratory report). Virtual labs combined the prework and the laboratory report into a single lesson that could be completed at any time. They did not include group quizzes, and participation in the instructor-led virtual session was optional. Overall, students performed better in in-person labs and in virtual labs with a familiar format (e.g., virtual microscopy), where average scores exceeded 19 out of 20 possible points, than in the virtual endocrinology lab. On average, the virtual labs received lower scores, with a particularly significant decline in the endocrinology lab. Student comments indicated that the solitary online format made the virtual labs seem more difficult. Interestingly, endocrinology lab grades were also analyzed according to how students chose to complete the lab. Those who participated in the interactive online session with the instructor performed better than those who completed the lab independently (Stokes & Silverthorn, 2021).

Online labs that used visual tutors with video recordings: In an electrical and computer engineering course that used a digital electronics visual tutor, student performance on final examination quizzes remained consistent with that observed in previous in-person sessions (George, 2020). Students took online quizzes after completing the labs on the online platform. These quizzes consisted of structured essay-type questions on the MyElearning course page, where students entered their solutions in designated fields. Students were allowed multiple attempts at the quizzes, which were graded manually upon completion. The quiz grades were then compared with student grades collected over a period of five years.

In general, the studies reviewed here indicate that learning outcomes can be achieved online, but care must be taken to identify the purpose of each lab and the most effective strategy for achieving it. Moreover, the reviewed studies focused primarily on the cognitive domain of learning. This domain covers objectives related to instrumentation, models, experiments, data analysis, and design (Feisel & Rosa, 2005). The review indicates that labs involving basic experimental skills are more effective when delivered online through simulations, live stream videos, and home labs rather than through recorded videos alone.

3.2.2. Student and Instructor Perceptions

A second aspect of identifying the effectiveness of online labs involved the perceptions of both the instructors and the students using them. Of the 34 articles reviewed, 23 evaluated student and instructor perceptions across online lab configurations using surveys, observations, and interviews. These studies covered video recordings (6 studies), live-streamed videos (3 studies), simulations (2 studies), a combination of videos and simulations (3 studies), home labs (2 studies), remote programming labs (2 studies), hybrid in-person and online labs (2 studies), an online panel format (1 study), an online learning platform with data acquisition equipment (1 study), and a visual tutor (1 study).

Online labs that used video recordings: Six studies used surveys to investigate the efficacy of video recordings in facilitating online laboratory sessions (Anstey et al., 2020; Franklin et al., 2021; Lacey & Wall, 2021; Leung et al., 2020; Petillion & McNeil, 2021; Wang & Ren, 2020). The overarching theme of the student feedback is that video recordings helped students learn scientific concepts. Students appreciated being able to watch the videos multiple times, which let them understand the concepts at their own pace. For example, students in a physics lab watched videos demonstrating how to establish the relationship between a pendulum's length and its period in order to derive the relevant equations. The findings suggested that repeated viewing enhanced students' ability to calculate the acceleration due to gravity (Hamed & Aljanazrah, 2020). After watching recordings of data collection, students reported that seeing the equipment and the types of measurements taken helped them understand the concepts better. This study also found that 83% of physics students considered the videos helpful for understanding the experiments before and during the hands-on laboratories (Hamed & Aljanazrah, 2020). The videos were effective in presenting concepts and explaining the experimental process. However, survey results also reflected negative feedback about the passive nature of learning from video recordings (Anstey et al., 2020; Leung et al., 2020; Petillion & McNeil, 2021; Wang & Ren, 2020). Incorporating interactive lab videos could be one way to address this issue (Wang & Ren, 2020).

Online labs that used live-streaming videos: Three studies in chemistry examined the effectiveness of live-streamed videos for delivering online labs. They used surveys and semi-structured interviews to collect feedback on student satisfaction (Davy & Quane, 2021; Petillion & McNeil, 2021; Woelk & Whitefield, 2020). Students valued the live-streamed videos for creating a sense of participation during lab activities. Although pre-recorded videos were generally preferred, live-streamed videos were valued for offering real-time learning experiences and opportunities to observe procedural errors. However, students questioned how well live lab demonstrations helped them understand specific lab equipment, and they also mentioned blurry video and occasional lag. Students preferred a two-camera setup, with a smartphone filming the instructor and a separate web camera providing a close-up view of the experiment. Overall, the results highlight the importance of real-time, engaging lab demonstrations for enhancing the learning experience and student engagement.

Online labs that used desktop simulations: Two studies collected survey data to analyze students' perceptions of simulations (Anstey et al., 2020; R. Gao et al., 2020). In an organic chemistry class, students used a simulation platform to perform electrophilic aromatic substitution reactions virtually and to grasp the underlying concept. They were also given mock laboratory notebook pages and analytical data as an exercise in identifying potential errors. Students appreciated the collaborative and creative nature of this experiment, but they longed for the hands-on experimentation that was unavailable in the remote setting (Anstey et al., 2020). Another study found that 67% of the juniors and seniors (n = 10) in a biochemistry lab were pleased with their overall remote learning experience incorporating simulations, while 33% were neutral. When asked to compare online laboratories with in-person instruction, most students reported that the virtual labs were effective (17%) or somewhat effective (50%), whereas 33% considered them less valuable (R. Gao et al., 2020). Overall, students found simulations beneficial for understanding concepts and practicing skills, particularly where hands-on experimentation was not feasible. However, there was a clear preference for in-person labs, with students valuing the hands-on aspects of traditional laboratory settings. This highlights the importance of balancing simulations with opportunities for hands-on learning.

Online labs that integrated video recordings and desktop simulations: Three articles examined the effectiveness of online labs that integrated videos and simulations. They collected student and instructor perceptions through student feedback surveys and instructor observations (Huang, 2020; Liang et al., 2020; Zhou, 2020). Instructors observed that the videos enabled students to understand critical experimental protocols, principles, and precautions, while the simulations allowed them to conduct virtual experiments (Zhou, 2020). Student perceptions support these observations. In a study of the successes and challenges of fully implementing online chemistry instruction, 93% of students rated this method as excellent and 7% as very good (Huang, 2020). A student survey in a mechanical measurements course revealed that students were satisfied with a remote teaching platform that incorporated breadboard simulations and video demonstrations (Liang et al., 2020). A vital component of this platform was Breadboard Simulator, an open-source virtual breadboard application. It allowed students to learn the features of breadboards and to connect and construct electrical circuits on a virtual breadboard, giving them a feel for working with actual circuits. The overarching theme of these studies is that integrating video recordings and desktop simulations in online labs benefited both students and instructors. The combination offers different learning modalities, providing a visual understanding of experiments along with the opportunity to perform them virtually. This makes it an effective strategy for supporting learning and experimentation across various domains.

Online labs that used home lab kits: One study in a mechanical measurements course had students determine strain in cantilever beams. Survey results showed that students preferred online labs incorporating home labs because they could work with physical equipment and experience its touch and feel (Liang et al., 2020). Another study used a post-course survey to assess student perceptions of how efficiently they learned through a kitchen chemistry lab (R. Gao et al., 2020). While most students found the kitchen chemistry lab valuable, a few raised concerns about safety. For example, students might use household chemicals or conduct experiments involving heat sources. One safety concern is therefore ensuring that students are properly trained to handle these chemicals and equipment to prevent accidents or injuries. The overarching theme of these studies is that students value hands-on experience with physical equipment in home labs. However, it is essential to address safety concerns and ensure that home labs provide a safe and effective learning environment.

Online labs that used remote programming labs: Two studies in the engineering domain analyzed the effectiveness of remote programming labs, one using a 5-point Likert scale (1 = completely disagree, 5 = completely agree) and the other a survey (Garcia et al., 2021; Leung et al., 2020). The Likert scale was used to analyze student opinions of a synchronous online platform used in a software engineering lab to solve programming problems with object-oriented and parallel programming. Students gave the highest ratings to questions about the simplicity of installation and the suitability of the infrastructure for meeting each lab's goals. They gave the lowest rating to the question about using remote labs to prevent cheating. An anonymous survey on student satisfaction with a machine-learning module, with a 17% voluntary response rate, found that 85% preferred it to the video-recorded labs. In this lab, students used at-home devices such as smartphones to gather human motion data and then analyzed the data using machine learning modules. All students agreed that this module helped them review statistics and grasp the fundamentals of machine learning (Leung et al., 2020). These results indicate that remote programming labs can enhance student learning and satisfaction. However, measures are needed to address concerns about cheating in online lab environments.
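The Likert-scale ranking described above amounts to averaging each item's ratings on the 1–5 scale and ordering the items by mean score. The Python sketch below illustrates that idea under our own assumptions. The item labels and the `rank_items` helper are hypothetical, and the original study may have used other summary statistics (for example, medians or response distributions).

```python
from statistics import mean

# Scale endpoints as described in the text: 1 = completely disagree, 5 = completely agree
LIKERT_MIN, LIKERT_MAX = 1, 5


def rank_items(responses):
    """Return (item, mean rating) pairs sorted from highest to lowest mean.

    `responses` maps each survey item (a hypothetical label) to a list of
    integer ratings on the 5-point scale.
    """
    summary = {}
    for item, ratings in responses.items():
        # Reject ratings that fall outside the 5-point scale
        if any(not LIKERT_MIN <= r <= LIKERT_MAX for r in ratings):
            raise ValueError(f"rating out of range for item {item!r}")
        summary[item] = mean(ratings)
    return sorted(summary.items(), key=lambda pair: pair[1], reverse=True)
```

Treating Likert responses as interval data when averaging them is a common simplification. A more conservative analysis would report the median or the full distribution of responses for each item.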

Online labs that implemented a hybrid approach: In a remote partner model, students collaborated online and onsite. Survey results showed that almost 90% of students agreed that working in the lab with their remote partner helped their learning. Approximately 70% of students agreed that working remotely with their onsite partner helped their understanding, especially of theoretical information, in the Infection Microbiology course (Koort & Åvall-Jääskeläinen, 2021). Most students agreed that the onsite experience helped them learn to work safely in the sterile environment of the lab, and their comments indicated that they valued the “trial and error” teaching strategy of the onsite labs. However, only half of the students agreed that online participation in the laboratories helped them learn to work safely and aseptically (Koort & Åvall-Jääskeläinen, 2021). Semi-structured interviews with students in a physics lab revealed that students enjoyed performing experiments online using interactive simulations more than passively watching recorded videos (Hamed & Aljanazrah, 2020). For example, in an experiment titled “Measuring the Acceleration of Gravity,” students varied the length of a pendulum and took multiple measurements of its period. They collected readings, created graphs, and analyzed the data to uncover the relationships between the variables (Hamed & Aljanazrah, 2020). The students also agreed that combining videos and simulations in the virtual labs made them aware of the experimental protocols and procedures. As a result, they felt more comfortable conducting the experiments in traditional labs. These results indicate that a hybrid approach combining online and onsite components can provide a comprehensive and engaging learning experience. By integrating online simulations and videos with traditional labs, students can deepen their understanding, become more familiar with safety protocols, and save time.

Online labs that used an online panel format: One study surveyed student perceptions of an online panel format (tutorial team and learning team) in a chemical engineering lab (J. Gao et al., 2021). Nearly 92% of the students mentioned that collaboration and communication between the two groups aided their understanding of the experiment's nature and requirements. In addition, 87% commented that the online panel format enhanced their ability to interpret and analyze data. According to 73% of students from the tutorial teams, discussing the questions in advance and participating in the question-and-answer sessions following the presentation helped them better understand the experiments. Furthermore, 87% of the students agreed that the tutorial presentation improved their core understanding of the procedure and helped them grasp the experiments. In the learning teams, 79% of the students felt more prepared to conduct these experiments than they had after their conventional pre-experiment preparation. Based on the survey findings, this panel structure allowed for interactive dialogue between the tutorial and learning teams, which was substantially more effective than passive listening.

Online labs that used online learning platforms with data acquisition equipment: Student feedback on the Lt® learning platform used in an anatomy and physiology lab was overwhelmingly positive, with students recognizing that the technology significantly enhanced their learning experience (Stokes & Silverthorn, 2021). Compared with in-person labs, students found the online format with the integrated learning platform much easier to follow. Comparing paper handouts in face-to-face delivery with computer-based learning on the virtual platform, they found the online version more organized. However, some students found specific experiments boring, confusing, or difficult in the virtual format. At the end of the course, students commented on two virtual laboratories, the endocrinology lab and the anatomy and histology lab. Their comments highlighted the importance of instructor participation and collaborative work in the online format (Stokes & Silverthorn, 2021).

Online labs that used visual tutors with video recordings: Based on student feedback collected using a survey in an electrical and computer engineering course using a digital electronics visual tutor, students expressed increased confidence in engaging in self-study while guided by the instructor (George, 2020). Students appreciated the wide range of learning resources available on the online platform, including the visual tutor and the newly introduced course textbook. In their overall assessment, students rated the Digital Logic Theory for Engineers Classic Workbook, Digital Electronics Visual Tutor, Port-Mapping Tool for Digital Logic Design, and Mock Quiz Feedback Documents as the most beneficial aspects of the modified teaching methodology.

Online labs that used analysis of previous year data to replace lab work: An online survey collected feedback from two groups of students in a 5-year program in Chemistry, Environment, and Chemical Engineering who took part in online education during the lockdown semesters. Its aim was to identify the successes and challenges of online education (Dietrich et al., 2020). Because of the pandemic, some practical lab work had to be canceled. This decision drew mixed responses: 33.3% of students considered it a good solution, 28.6% disagreed, and 38.1% remained neutral. Students mentioned that the attempts to maintain the continuity of lab work proved more time-consuming and exhausting. Replacing laboratory work with the analysis of previous data alone was rejected by 61.2% of the students because it merely involved numerical calculations performed autonomously. However, 44.7% of the students appreciated the same approach when an educator was present through video conferencing in small groups. Students in disciplines related to engineering science, where hands-on practical work is crucial, were particularly frustrated by the loss of the practical aspect of lab work. On the other hand, some students noted that the theoretical aspect was covered in greater depth, leading to a better understanding of the course material.

Based on the analyses of these studies, integrating various methods, such as video recordings with interactive elements, live-streamed videos, simulations, and home labs, can enhance student learning and engagement in online labs. Nevertheless, a hybrid approach that combines in-person and online experiments can be ideal, even in traditional classroom settings, as it saves time and provides an enhanced learning environment for the student.

3.3. Challenges Faced by Institutions in Transitioning to Online Platforms

The unexpected transition to online delivery of labs caused by the COVID-19 pandemic created several challenges across STEMM education. The main barriers identified in the reviewed studies were lack of access to technology, workload and expertise constraints, academic integrity, and lack of student engagement.

Lack of access to technology: The reviewed articles identified lack of access to the Internet and to the technology needed for online lab delivery as an ongoing challenge during the pandemic (Aguirre & Selampinar, 2020; Anstey et al., 2020; Anzovino et al., 2020; Chierichetti & Backer, 2021; Choate et al., 2021; R. Gao et al., 2020; Huang, 2020; Kolack et al., 2020; Tran et al., 2020). As instructors and students moved to remote teaching and learning, they lost access to institutional services. Some areas, particularly remote ones and those serving marginalized populations, lacked the Internet and network technology required to support online education (Englund et al., 2017; Huda et al., 2018). More than two-thirds of students had problems with intermittent Internet connectivity during the spring of 2020. More than 50% reported the lack of a physical study space and unavailable webcams (Chierichetti & Backer, 2021). Students often shared laptops with family members as they worked or attended school remotely. Furthermore, high-quality Wi-Fi was not as readily available at home as on campus, an especially prominent issue in remote areas and underserved communities. Instructors mitigated these issues to some extent by providing loaner laptops and portable Wi-Fi connections (Anstey et al., 2020; Anzovino et al., 2020; Kolack et al., 2020; Tran et al., 2020; Zhou, 2020).

Workload and expertise constraints: Workload and expertise constraints hindered an effective and efficient transition to online platforms for both instructors and students (Aguirre & Selampinar, 2020; Chierichetti & Backer, 2021; Choate et al., 2021; Tran et al., 2020). Instructors had to use skills and expertise outside their training and teaching experience (Chierichetti & Backer, 2021). This study further documented that, after the shift to online platforms, instructors most frequently reported using audio and video conferencing tools, followed by webcams, online videos or tutorials, and YouTube. The study also found that 70.4% of the instructors spent more time preparing online course materials than they had for traditional in-person instruction. Reformatting or reinventing the labs and providing technology support for less experienced teaching assistants and students added to instructors' workloads. In addition, students found it difficult to adapt quickly to new learning formats and to become accustomed to online platforms. Students' perceptions of their spring 2020 classes also differed from those of earlier classes, and they described the experience more negatively. After classes moved online in April 2020, a survey of students at a prominent Texas institution found that 71% of respondents reported increased stress and anxiety, and almost 90% had difficulty maintaining focus (Son et al., 2020). More students began scheduling one-on-one meetings with instructors, mainly to seek emotional support and academic help (Aguirre & Selampinar, 2020; Chierichetti & Backer, 2021). To ease the transition, instructors also supplied a wealth of materials on online learning, time management, and stress management.

Academic integrity: Several articles identified a lack of online proctoring guidelines, which raised academic integrity issues (Aguirre & Selampinar, 2020; Anzovino et al., 2020; Chang et al., 2021; Choate et al., 2021; Dietrich et al., 2020; Garcia et al., 2021; Huang, 2020; Stokes & Silverthorn, 2021). Students were inconvenienced by the need to use two devices, for example, one to complete an exam and another to monitor themselves (Huang, 2020). The studies offered several recommendations for addressing academic integrity issues. For instance, one study found a computer monitoring system (Veyon) effective for recording students during lab exams across remote labs. Others required a 360-degree scan of the room where students took their exams or used lockdown browsers to ensure integrity (Aguirre & Selampinar, 2020; Anzovino et al., 2020; Chang et al., 2021). Other instructors replaced closed-book lab exams and assignments with presentations (Choate et al., 2021; Dietrich et al., 2020; Stokes & Silverthorn, 2021). Presentations have the added benefit of improving communication skills while ensuring that students have a solid grasp of the concepts.

Lack of student engagement: Finally, irrespective of the platform, none of the online labs could engage the students in a complete lab experience that included both technical skills and non-technical experiences (Buchberger et al., 2020; Choate et al., 2021; Huang, 2020; Liang et al., 2020; Wang & Ren, 2020). For example, it was difficult for virtual labs to provide an authentic lab experience to the students, involving apparatus selection, washing and handling glassware, waste disposal, and using goggles and gloves (R. Gao et al., 2020). The challenges of virtual learning were amplified by the reduced interaction between instructors and students, the absence of real-time feedback, and the lack of opportunities for teamwork, making it difficult to provide a fully authentic lab experience.

The transition of labs from in-person to online platforms was undoubtedly challenging, and IHEs made considerable efforts to navigate the difficulties brought on by the pandemic. Among these challenges, lack of access to technology stood out as the most prominent concern, highlighted by nine of the 34 studies reviewed. IHEs were proactive in finding timely solutions to this issue and also made efforts to maintain academic integrity to a certain extent. However, managing workload and expertise constraints and ensuring a comprehensive lab experience with adequate student engagement required additional effort and careful planning.

4. Discussion

In this section, we synthesize findings from the reviewed literature on how educational institutions ensured academic continuity during the pandemic. The findings concern three areas: enhancing the learning experience and student engagement in lab courses, technology and software competency, and academic integrity. We have distilled these findings into suggestions that institutions may implement to maintain academic continuity. The suggestions focus on developing an academic continuity plan with a primary emphasis on maintaining teaching and learning: 1) Identify or develop evidence-based digital learning tools and software that support learning outcomes; 2) Provide synchronous and asynchronous sessions while choosing evidence-based digital tools to enhance student engagement and learning effectiveness; 3) Provide preemptive training in software and technology for instructors and students to prepare them for current technologies; 4) Develop instructions and guidelines for assessments to uphold academic integrity; and 5) Continuously improve online lab courses by integrating student and instructor feedback and addressing any challenges encountered.

Identify or develop evidence-based digital learning tools and software that support learning outcomes: The findings of this review suggest that instructors used video recordings, desktop simulations, and home labs, individually or in combination, to continue teaching and learning. Expanding the availability of well-planned commercial lab kits across various domains is worth considering as a way to provide more opportunities for hands-on practice. Safety concerns about home lab kits can potentially be addressed using Extended Reality (XR) technologies. XR is a broad term that includes various immersive technologies, such as Mixed Reality (MR), Augmented Reality (AR), and Virtual Reality (VR) (Kwok & Koh, 2021). Using XR technologies, students can engage in simulated laboratory environments that provide a sense of working with real equipment while minimizing safety risks. Other studies support this suggestion by showing the efficacy of XR technologies in enabling learners to safely familiarize themselves with glove hygiene procedures (Broyer et al., 2021), rehearse surgical procedures (Lohre et al., 2020), or identify potential safety hazards in a manufacturing unit (Madathil et al., 2017). In a conventional undergraduate laboratory, even with proper hygiene instruction, students still require constant reminders to remove their gloves in order to maintain hygiene and prevent cross-contamination. A VR lab, however, appeared to be an effective tool for training students in glove hygiene. It reinforced the importance of glove hygiene by visually demonstrating the otherwise invisible spread of chemicals from improper glove use, giving students immediate visual feedback (Broyer et al., 2021). In this active learning application, students could visually track the spread of contamination and receive feedback, which enhanced their learning compared with traditional instruction (Broyer et al., 2021).
A study in orthopedic education found that participants in the immersive VR group completed a difficult multistep surgical task faster than the traditionally trained group (Lohre et al., 2020). The immersive VR group also exhibited improved instrument handling. This may reflect the module's immersive nature: users performed the task virtually, whereas the control group passively read a comprehensive traditional journal article outlining the steps. Another approach to offering authentic experiences is to create Extended Reality Remote Laboratories (XRLs) (da Silva et al., 2023). Remote laboratories enable real experimentation anywhere and at any time, but their main drawback is a lack of immersion. By incorporating AR and VR methods into remote experimentation, XR can address this drawback and create XRLs, which can take the form of Virtual Reality Remote Laboratories or Augmented Reality Remote Laboratories.

Provide synchronous and asynchronous sessions while choosing evidence-based digital tools to enhance student engagement and learning effectiveness: Maintaining student engagement on online platforms is challenging but can be addressed through direct interaction with instructors and peers. Synchronous, real-time, video-based delivery can facilitate such interaction, but it may be infeasible because of differing time zones or technology issues. In these instances, instructors found asynchronous methods practical. These strategies are consistent with the recommendation to use technological tools synchronously, asynchronously, or in an integrated manner to maintain student engagement and involvement in the course (García-Morales et al., 2021). According to the systematic review by García-Morales et al. (2021), numerous teaching methods can be employed with current technological tools. Examples include video conferences for lectures, presentations and slideshows for sharing material, and chat for interaction. Each of these techniques can be used synchronously, asynchronously, or in a blended manner to keep students engaged in the course and maintain their attention (García-Morales et al., 2021). Another potential strategy is to provide online team collaboration spaces, collaborative activities, group discussions, and other forms of student interaction to enhance engagement and learning effectiveness. Studies recommending the creation of a virtual community of practice to improve peer engagement and collaborative learning among students support this suggestion (Carolan et al., 2020; Gamage et al., 2020; Müller & Ferreira, 2005).
Müller and Ferreira (2005) found that in several MARVEL (Virtual Laboratory in Mechatronics: Access to Remote and Virtual E-Learning) learning scenarios, students engage in collaborative activities that enhance their learning effectiveness. MARVEL is a project that provides a framework for analyzing the pedagogic effectiveness of online labs. It uses remote and MR techniques to conduct online labs that support experiential and collaborative learning. Through these collaborative activities, students cooperate as peers and use their collective knowledge to solve problems. The resulting conversation gives them the chance to continuously assess and improve their understanding of the topic. Breakout rooms in video conferencing software such as Zoom support online team collaboration, allowing students to discuss their projects in smaller groups without interruption or distraction from other groups. However, to ensure a balanced and well-coordinated learning experience, instructors should carefully plan and communicate the duration of Zoom sessions and collaborative activities. This may help prevent students from becoming disengaged because of lengthy Zoom sessions or poorly suited learning activities. Another approach to enhancing student learning and engagement is to provide additional learning resources that keep students motivated. These resources can be made available through shared repositories and online libraries.

Provide preemptive training in software and technology for instructors and students to prepare them for current technologies: During the pandemic, students and instructors faced additional pressure because they had to learn new technologies and software. Preemptive software and technology training can help instructors implement engaging simulations, use web conferencing platforms such as Zoom effectively, and navigate future disruptions more efficiently. These suggestions are consistent with García-Morales et al.'s (2021) review of ways to maintain academic continuity, which emphasized digitalizing learning processes and offering specific technical training to professors, administrative staff, and students. The review found that the main challenges professors identified in adapting to online learning included the need for specific computer skills, proficiency in communicating in online settings, and familiarity with various digital tools and technologies. It also pointed out that universities may face budget constraints when adopting new technologies and covering training costs. Further investment may be necessary to strengthen digital infrastructure.

Develop instructions and guidelines for assessments to uphold academic integrity: Academic dishonesty in online or remote courses is a significant concern for IHEs (Holden et al., 2021). To address it, IHEs need to establish guidelines and rules during the initial planning phases of online education. One effective method is the Multiple Attempts Format (MAF) for online assessments (Estidola et al., 2021). MAF is a testing tool that allows students multiple attempts at an online assessment: after each attempt, students receive immediate feedback and decide whether to make another attempt. Students reported that MAF allowed them to improve their scores honestly and strengthened their commitment to academic integrity (Estidola et al., 2021), and teachers agreed that MAF teaches students responsibility, decision-making, and risk-taking as they work to improve their scores. Fostering self-regulated learning skills, in which learners set goals and manage their own learning, can also contribute to academic integrity (McAllister & Watkins, 2012). Course design strategies such as reducing exam weight and providing prompt feedback are also recommended for maintaining academic integrity.
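To make the multiple-attempts mechanism concrete, the following Python sketch models a single MAF-style quiz. It is a hypothetical illustration, not the implementation used by Estidola et al. (2021): the class name `MultipleAttemptQuiz`, the attempt cap, per-item correct/incorrect feedback, and the rule that the best attempt is recorded are all assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class MultipleAttemptQuiz:
    """Hypothetical sketch of a multiple-attempts assessment.

    Assumptions (not taken from Estidola et al., 2021): a fixed answer key,
    a cap on the number of attempts, and the best attempt as the recorded score.
    """
    answer_key: dict[str, str]
    max_attempts: int = 3
    scores: list[float] = field(default_factory=list)

    def attempts_left(self) -> int:
        return self.max_attempts - len(self.scores)

    def submit(self, answers: dict[str, str]) -> dict:
        """Grade one attempt and return immediate feedback to the student."""
        if self.attempts_left() <= 0:
            raise RuntimeError("no attempts remaining")
        # Immediate feedback: which items were answered correctly.
        correct = {q: answers.get(q) == key for q, key in self.answer_key.items()}
        score = 100 * sum(correct.values()) / len(correct)
        self.scores.append(score)
        # The student sees this and decides whether to attempt again.
        return {"score": score, "correct": correct,
                "attempts_left": self.attempts_left()}

    def recorded_score(self) -> float:
        # Assumed policy: the best attempt counts toward the grade.
        return max(self.scores, default=0.0)
```

For example, a student who scores 50 on a first attempt sees which items were wrong and can choose to try again; under the assumed best-attempt rule, a second attempt scoring 100 becomes the recorded score. A fuller version might also randomize items between attempts, which this sketch omits.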

Continuously improve online lab courses by integrating student and instructor feedback and addressing any challenges encountered: The reviewed articles explored the challenges students and instructors faced in conducting online labs, and analyzing these challenges reveals the major barriers institutions face in transitioning to online platforms. Feedback from students and instructors is important for identifying and mitigating these challenges, ensuring a successful transition, and improving the online lab experience.

In conclusion, this review suggests that institutions that implement the recommendations outlined above can be better prepared to overcome challenges and maintain academic continuity during future disruptions. Combining video recordings, desktop simulations, XR technologies, and expanded use of commercial lab kits can offer a comprehensive laboratory experience in online education. Strategies such as direct interaction, synchronous and asynchronous delivery, and collaborative activities are essential for maintaining student engagement, and it is equally important to establish clear rules that uphold academic integrity. These findings highlight the need to adapt to new technologies and strategies to ensure effective teaching and learning on online platforms. Institutions often provide e-learning options when they close due to inclement weather; they could also use these days to pilot-test online laboratories, designating them as official digital learning days. This approach would help teachers and students become more familiar with online labs and better able to use and navigate these platforms.

4.1. Framework for Transitioning Lab Courses to Online Platforms

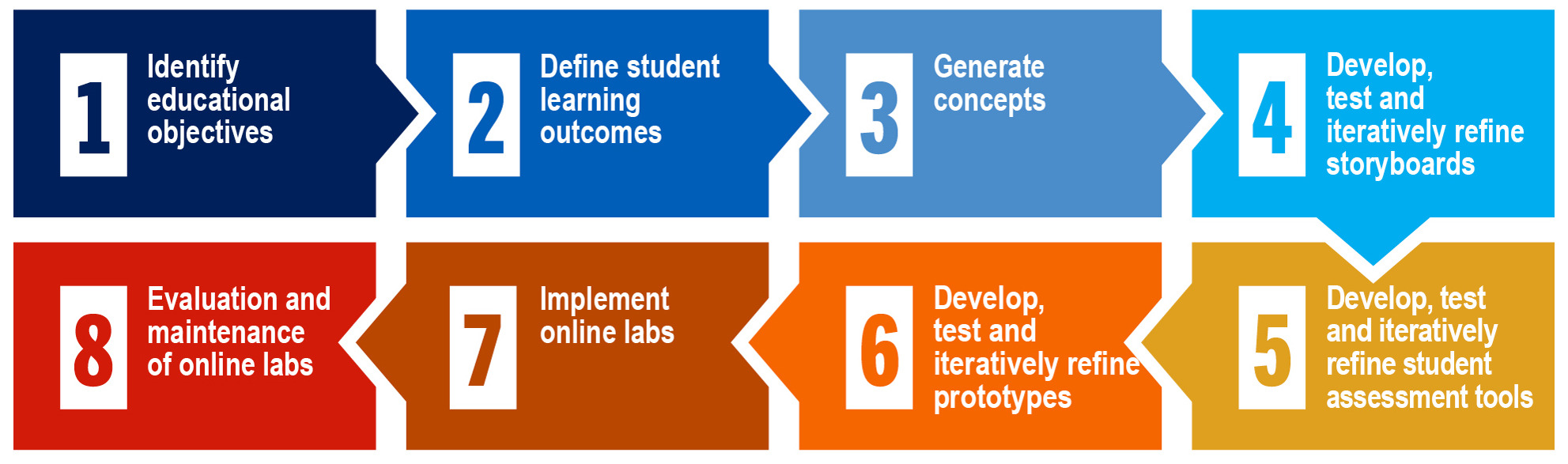

Transitioning lab courses from in-person to online platforms can be challenging. The literature review showed that institutions used various strategies to conduct online labs, and many relied on readily available simulations or recorded videos of their lab demonstrations. However, designing labs around a course's learning outcomes, rather than relying solely on available resources, could enhance the learning experience. We therefore propose a framework that institutions may use to tailor their online labs, based on the product design and development process (Ulrich et al., 2008). As in any new product development, creating online labs requires a dedicated team of educators, industry partners, instructional designers, interaction designers, and software developers. Educators and industry partners are the subject matter experts (SMEs) who provide insights and requirements for developing the lab course. Instructional designers create new courses and curricula, redesign existing courses, develop training materials such as teaching manuals and student guides, conduct quality checks, and implement feedback from program reviewers. Interaction designers analyze complex lab processes and distill them into clear models and concepts. Software developers determine how the online lab design can be realized, provide feedback on the difficulty of implementing various design aspects, and ultimately implement the system. Table 3 outlines the digital learning tool development process and each team member's role throughout the phases, and Figure 5 presents a visual representation of the framework and its phases. Using this framework, institutions can develop lab courses and increase the likelihood of a smooth and successful transition to the online platform.
A brief explanation of each phase is provided below, and Figure 6 illustrates the activities and outcomes associated with each phase. This framework is intended specifically for online labs delivered through digital learning platforms, not for online labs that incorporate home labs.

Phase 1 – Identify educational objectives: Educational objectives describe what an instructor or course aims to achieve (Course Objectives & Learning Outcomes, n.d.). These objectives can be identified by reviewing accreditation criteria and conducting a cognitive task analysis (CTA) with SMEs (Clark & Estes, 1996). A CTA draws on observational studies, focus groups, and interviews to understand the demands placed on students, as well as the knowledge and specific skills required for working in the labs (Clark & Estes, 1996). These insights help the development team create more relevant learning experiences and streamline learning objectives, ultimately supporting students' academic and professional success.

Phase 2 – Define student learning outcomes: Learning outcomes are the knowledge, skills, and abilities students acquire during their studies (Criteria for Accrediting Engineering Programs, 2021 – 2022, n.d.). The development team identifies specific, measurable learning outcomes consistent with the course's goals, level, and content, while also considering students' needs and expectations across the cognitive, affective, and psychomotor domains. Using frameworks such as Bloom's Taxonomy (Bloom et al., 1956), the team can create comprehensive and appropriate learning outcomes for students completing highly kinesthetic STEMM labs. In lab courses, examples of learning outcomes include identifying and analyzing basic experimental principles, demonstrating the ability to conduct appropriate experiments, collecting and analyzing data, formulating results, and communicating findings effectively through well-written lab reports (Criteria for Accrediting Engineering Programs, 2021 – 2022, n.d.).

Phase 3 – Generate concepts: The development team can interview lead users and experts, conduct literature reviews, or perform patent searches to identify feasible strategies for conducting online labs. This can help them develop initial concepts for the lab course and determine the best strategy for transitioning it to an online platform. Our literature review identified several instructional strategies for conducting online labs, including video recordings, desktop simulations, and XR technologies. The team can also draw on existing content libraries such as Open Educational Resources Commons (Gallaway, n.d.), Massachusetts Institute of Technology OpenCourseWare (MIT OpenCourseWare, n.d.), and Skills Commons (Home - SkillsCommons Repository, n.d.) to select appropriate educational materials. The identified strategies can then be refined to ensure that they align with the course goals and are accessible to all students. These concepts can be presented as sketches of the proposed strategies with brief textual descriptions.

Phase 4 – Develop, test, and iteratively refine storyboards: Storyboarding is a widely used technique in human-computer interaction design for communicating the temporal sequence of actions in a particular lab activity (Ulrich et al., 2008). It offers a quick and easy way to modify designs before virtual lab software development begins, and it enables teams to evaluate potential content for inclusion in online lab platforms. Sketches, videos, and animations can be used to create storyboards that build on the ideas generated in the concept phase (video recordings, simulations, and XR technologies) and align with the desired learning outcomes. Storyboards go through several iterations and refinements based on the CTA and feedback from SMEs. Through this process, the team can create models of online labs using the instructional strategies identified in the concept generation phase. These models demonstrate how users will interact with the system, allowing the team to refine the design iteratively.

Phase 5 – Develop, test, and iteratively refine student assessment tools: As part of the student evaluation process, educators can use assessment tools to collect and analyze data that evaluate student outcomes and achievement levels (Criteria for Accrediting Engineering Programs, 2021 – 2022, n.d.). Formative assessment provides students with feedback on their progress throughout the learning process (Glazer, 2014) and includes methods such as open- and closed-ended response questions, essays, and performance tasks such as poster presentations and projects. Summative assessment, by contrast, measures students' learning outcomes at the end of a term, semester, or year for grading, evaluation, or certification purposes; it includes closed-ended formats such as multiple-choice questions, true-or-false statements, and fill-in-the-blank exercises (Glazer, 2014). Methods such as Rasch analysis may also be used to analyze learning gains (Bond et al., 2020). Learning management systems (LMS) may be used to administer quizzes and provide feedback. Beyond the LMS, digital tools such as Kahoot! combine game dynamics with the ability to monitor student learning (Correia & Santos, 2017); Kahoot! enables teachers to create interactive quizzes, surveys, and discussions, fostering learning through a dynamic and engaging game format (Dellos, 2015).
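For readers unfamiliar with Rasch analysis, the Python sketch below shows the dichotomous Rasch model, in which the probability that a student with ability θ answers an item of difficulty b correctly is exp(θ - b) / (1 + exp(θ - b)), together with a Newton-Raphson maximum-likelihood estimate of θ from one student's responses. It assumes that item difficulties have already been calibrated on the same logit scale (dedicated Rasch software estimates item and person parameters jointly), and the function names and example difficulties are hypothetical. Taking the difference between post-test and pre-test ability estimates is one simple way to express a learning gain.

```python
import math


def rasch_prob(theta: float, b: float) -> float:
    """Dichotomous Rasch model: P(correct) for ability theta and item difficulty b, in logits."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def estimate_ability(responses: list[int], difficulties: list[float],
                     tol: float = 1e-8, max_iter: int = 100) -> float:
    """Maximum-likelihood ability estimate, assuming item difficulties are already known.

    Newton-Raphson on the log-likelihood: the estimate satisfies
    sum(responses) == sum(P_i(theta)).
    """
    raw = sum(responses)
    if raw == 0 or raw == len(responses):
        # All-wrong or all-right scores have no finite maximum-likelihood estimate.
        raise ValueError("extreme score: ability estimate is not finite")
    theta = 0.0
    for _ in range(max_iter):
        probs = [rasch_prob(theta, b) for b in difficulties]
        expected = sum(probs)                             # expected raw score at theta
        information = sum(p * (1 - p) for p in probs)     # negative second derivative
        step = (raw - expected) / information
        theta += step
        if abs(step) < tol:
            break
    return theta


# Hypothetical three-item test, scored before and after an online lab.
difficulties = [-1.0, 0.0, 1.0]
pre = estimate_ability([1, 0, 0], difficulties)
post = estimate_ability([1, 1, 0], difficulties)
gain = post - pre  # learning gain in logits
```

Working in logits rather than raw percentages is the main reason to use a Rasch model here: under the model, equal raw-score differences do not correspond to equal ability differences across the scale.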

Phase 6 – Develop, test, and iteratively refine online lab prototypes: Institutions can employ multiple digital technologies and software tools that simulate real-world laboratory experiences, giving students flexible and convenient opportunities for practical learning. For instance, simulations can be built with software such as Unity, and XR equipment can provide an immersive experience. Similarly, videos can be recorded with high-definition cameras and edited with tools such as Adobe Premiere Pro or Camtasia. The online labs are developed according to the sequence of lab procedures finalized in the storyboarding phase. During testing and refinement, the prototype can undergo multiple evaluations to improve its usability and functionality (Ulrich et al., 2008). Usability can be assessed with heuristic evaluation (Nielsen, 1994) and subjective assessment tools such as the System Usability Scale (Brooke, 1996). The team can then refine the prototype platform based on the feedback received in preparation for the implementation phase.
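The System Usability Scale has a fixed scoring rule (Brooke, 1996): each of its ten items is rated from 1 to 5; odd-numbered items contribute (response - 1), even-numbered items contribute (5 - response), and the sum is multiplied by 2.5 to give a score from 0 to 100. The Python sketch below applies that rule to one participant's responses; the function name and example responses are illustrative, and averaging scores across participants is one way a team might summarize a round of prototype testing.

```python
def sus_score(responses: list[int]) -> float:
    """System Usability Scale score (0-100) from ten Likert responses (1-5), in item order."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 item responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")
    total = 0
    for item_number, r in enumerate(responses, start=1):
        # Odd-numbered items are positively worded: contribution = r - 1.
        # Even-numbered items are negatively worded: contribution = 5 - r.
        total += (r - 1) if item_number % 2 == 1 else (5 - r)
    # Each contribution is 0-4, so the raw sum is 0-40; scale it to 0-100.
    return total * 2.5


def mean_sus(all_responses: list[list[int]]) -> float:
    """Average SUS score across participants in one testing round."""
    return sum(sus_score(r) for r in all_responses) / len(all_responses)
```

For example, `sus_score([4, 2, 4, 2, 5, 1, 4, 2, 4, 2])` returns 80.0. Note that an SUS score is not a percentage; it is a relative measure best compared across prototype iterations.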

Phase 7 – Implement online labs: In this phase, online labs are made available to instructors and students through various digital tools and software. The design team can create tutorials that explain how to use the relevant software and navigate the online lab platform. Additional testing may also be required to confirm that the online labs still function as intended once deployed. This phase also provides insights into how users interact with the system.
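Read together, the seven phases form an ordered workflow in which Phases 4 to 6 repeat until their outputs are accepted. The Python sketch below encodes that reading. The `review` callback, the round limit, and the names `Phase` and `run_framework` are hypothetical stand-ins for SME, usability, and stakeholder feedback; they are not drawn from Table 3 or Figure 5.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Phase:
    number: int
    name: str
    iterative: bool  # True for the "develop, test, and iteratively refine" phases


PHASES = [
    Phase(1, "Identify educational objectives", False),
    Phase(2, "Define student learning outcomes", False),
    Phase(3, "Generate concepts", False),
    Phase(4, "Develop, test, and iteratively refine storyboards", True),
    Phase(5, "Develop, test, and iteratively refine student assessment tools", True),
    Phase(6, "Develop, test, and iteratively refine online lab prototypes", True),
    Phase(7, "Implement online labs", False),
]


def run_framework(review: Callable[[Phase, int], bool], max_rounds: int = 5) -> list[str]:
    """Walk the phases in order; iterative phases repeat until `review` accepts them.

    `review(phase, round_number)` is a hypothetical hook standing in for
    feedback from SMEs or usability testing; it returns True when accepted.
    """
    log = []
    for phase in PHASES:
        rounds = max_rounds if phase.iterative else 1
        for round_number in range(1, rounds + 1):
            log.append(f"Phase {phase.number}, round {round_number}: {phase.name}")
            if not phase.iterative or review(phase, round_number):
                break
        else:
            # Reached only if an iterative phase was never accepted.
            raise RuntimeError(f"Phase {phase.number} not accepted after {max_rounds} rounds")
    return log
```

With a reviewer that accepts every iterative phase on its second round, `run_framework(lambda phase, r: r >= 2)` logs ten steps: one round for each of Phases 1 to 3 and 7, and two rounds for each of Phases 4 to 6.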